- Microsoft Copilots, built on OpenAI models, are its most important AI products.

- Internal audio reveals early development work on Microsoft’s Security Copilot service.

- In the audio, a Microsoft exec said the company had to “cherry pick” examples due to hallucinations.

To get a sense of the real potential and practical pitfalls of generative AI, look no further than Microsoft’s early work on its Security Copilot service.

The world’s largest software maker introduced Security Copilot in early 2023. It’s one of Microsoft’s most important new AI products, tapping into OpenAI’s GPT-4 and an in-house model to answer questions about cyberthreats in a similar style to ChatGPT.

The road to launching the product was challenging but also included hopeful revelations about the power of this new technology, according to an internal Microsoft presentation from late 2023. Business Insider obtained an excerpt of the presentation, revealing some details about how this important AI product was created.

GPU supply problems

Originally, Microsoft was working on its own machine-learning models for security use cases, according to the presentation by Microsoft Security Research partner Lloyd Greenwald.

The initiative, involving petabytes of security data, stalled because of a lack of computing resources when “everybody in the company” was using Microsoft’s limited supply of GPUs to work with GPT-3, the predecessor to GPT-4, Greenwald explained.

Early GPT-4 access

Then, the software giant got early access to GPT-4 as a “tented project,” he said, according to the audio obtained by BI. That’s a term for a project to which Microsoft tightly restricts access.

At that point, Microsoft shifted focus away from its own models to see what it could do in the cybersecurity space with GPT-4.

“We presented our initial explorations of GPT-4 to government customers to get their feel and we also presented it to external customers without saying what the model is that we were using,” Greenwald said.

The pitch

The pitch centered around the benefits of mostly using a single universal AI model rather than many individual models.

Microsoft still has several specific machine-learning models for solving specific problems, such as attack campaign attribution, compromised account detection, and supply chain attack detection, Greenwald said.

“The difference is if you have a large universal model or a foundation model that they are called now, like GPT-4, you can do all things with one model,” he added. “That’s how we pitched it to the government back then and then we showed them a little bit about what we were trying to do.”

Greenwald noted that the capabilities Microsoft initially showed the government were “childish compared to the level of sophistication” the company has achieved now.

Microsoft spokesman Frank Shaw said the meeting pertained to technology built on GPT 3.5 and is “irrelevant” to the current Security Copilot built on GPT-4.

“The technology discussed at the meeting was exploratory work that predated Security Copilot and was tested on simulations created from public data sets for the model evaluations, no customer data was used,” Shaw said. “Today, our Early Access Program customers regularly share their satisfaction with the latest version of Security Copilot.”

Cherry-picking and hallucinations

According to the audio obtained by BI, Microsoft started testing GPT-4’s security capabilities by showing the AI model security logs and seeing if it could parse the content and understand what was going on.

They would paste a Windows security log, for example, into GPT-4 and then prompt the model by telling it to be a “threat hunter” and find out what had happened, Greenwald said in the presentation.

This was without any extra training on specific security data — just OpenAI’s general model off the shelf. GPT-4 was able to understand this log, share some interesting things about what was in the log, and say whether there was anything malicious.
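As a rough illustration of the kind of prompt described above, the Python sketch below assembles a role-based chat prompt around a raw log. It is not Microsoft's code: the function name, the prompt wording, and the sample log line are all assumptions made up for this example, and the sketch stops short of calling any model.

```python
def build_threat_hunter_messages(log_text: str, question: str) -> list[dict[str, str]]:
    """Wrap a raw security log in a 'threat hunter' chat prompt.

    Hypothetical helper: the wording of the instructions is an assumption,
    not the prompt Microsoft actually used.
    """
    system = "You are a threat hunter. Analyze the security log the user provides."
    user = f"Security log:\n{log_text}\n\nQuestion: {question}"
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]


# Invented log line for illustration only (203.0.113.7 is a documentation address).
sample_log = (
    "Event 4625: An account failed to log on. "
    "Account Name: admin Source Network Address: 203.0.113.7"
)
messages = build_threat_hunter_messages(
    sample_log, "What happened here, and is anything malicious?"
)
```

Nothing beyond the log and the question is passed along, which matches the ungrounded, off-the-shelf setup the presentation describes.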

“Now this is in some ways a cherry-picked example, because it would also tell us things that just weren’t right at all,” Greenwald said. “Hallucination is a big problem with LLMs and there’s a lot we do at Microsoft to try to eliminate hallucinations and part of that is grounding it with real data, but this is just taking the model without grounding it with any data. The only thing we sent it was this log and asking it questions.”

“We had to cherry pick a little bit to get an example that looked good because it would stray and because it’s a stochastic model, it would give us different answers when we asked it the same questions,” he added. “It wasn’t that easy to get good answers.”

It’s unclear from the audio of the presentation whether Greenwald was saying that Microsoft used these cherry-picked examples during early demos to the government. BI asked the company specifically about this and it didn’t respond to that question.

“We work closely with customers to minimize the risk of hallucinations by grounding responses in customer data and always providing citations,” Microsoft’s Shaw said.

Digging in on training data

Microsoft also dug in to see where GPT-4 got the information it already seemed to know about cybersecurity topics such as logs, compromising situations, and threat intelligence.

Greenwald said that Microsoft’s experience from developing GitHub Copilot showed that OpenAI models were trained on open-source code. But these models were also trained on computer science papers, patent office data, and 10 years of website crawls.

“So there was reasonable data on security in there, it was all static in time, all security data back from prior to late 2021,” Greenwald explained.

Indicators of compromise

Greenwald also shared another example of the security questions Microsoft asked OpenAI’s models, saying “this is just what we demoed to the government,” according to the audio of his presentation.

Again, Microsoft told the AI model to be a “threat hunter,” but this time gave it a different security log from a specific incident and asked it to explain what was going on and to identify any IOCs, or indicators of compromise. These are digital traces that cybercriminals leave inside computer networks during attacks.
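To make the idea of an IOC concrete, the short Python sketch below pulls two common indicator types, IPv4 addresses and SHA-256 file hashes, out of a log string using regular expressions. This is a simple stand-in to show what such indicators look like, not how the language model identified them; the function name and the sample log are assumptions invented for this example.

```python
import re

# Loose patterns for two common indicator types (illustrative, not exhaustive).
IPV4 = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")
SHA256 = re.compile(r"\b[a-fA-F0-9]{64}\b")


def extract_iocs(log_text: str) -> dict[str, list[str]]:
    """Return the unique IPv4 addresses and SHA-256 hashes found in a log."""
    return {
        "ipv4": sorted(set(IPV4.findall(log_text))),
        "sha256": sorted(set(SHA256.findall(log_text))),
    }


# Invented log text; the addresses are reserved documentation or private ranges.
fake_hash = "a" * 64
sample_log = f"Connection from 198.51.100.23 to 10.0.0.5; dropped file hash {fake_hash}"
iocs = extract_iocs(sample_log)
```

A fixed pattern like this only finds indicators it was written to recognize, whereas the presentation describes a model that also explained the attack and suggested remediation.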

The model was able to figure out the IOCs, what happened with the attack and how to remediate it, just by looking at the security log, Greenwald said. This output was from GPT 3.5, rather than the more advanced GPT-4, he noted.

“We were able to present to the government this is from GPT 3.5 these are experiments on what we can do,” Greenwald added. “These answers are pretty good and pretty convincing but GPT-4 answers were even better.”

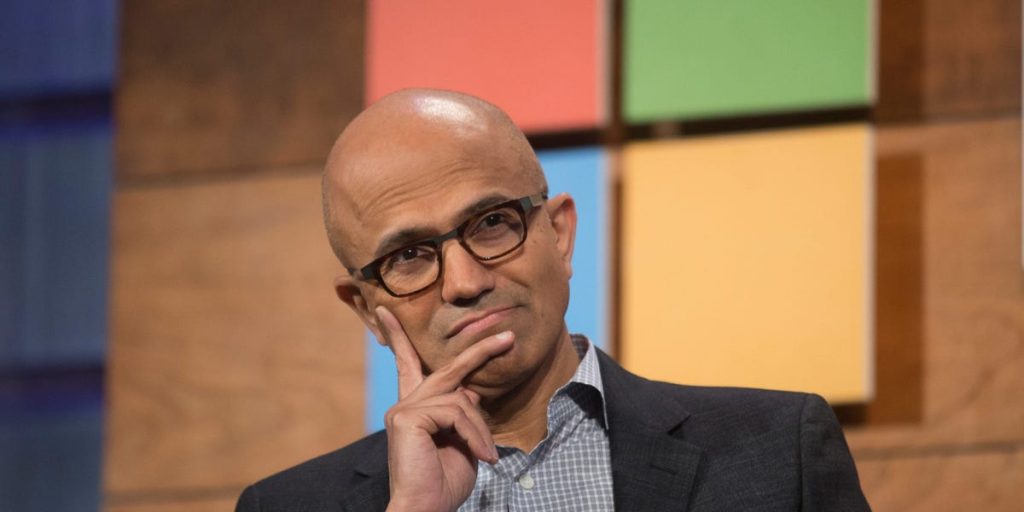

That’s what got CTO Kevin Scott, CEO Satya Nadella and many others at Microsoft excited, according to Greenwald.

A real product, with Microsoft data

The effort then moved out from the applied research organization under Eric Douglas and got an engineering team to try to turn this into what Greenwald called “something more like a real product.”

Microsoft has incorporated its own data into the Security Copilot product, which helps the company “ground” the system with more up-to-date and relevant information.

“We want to pull in security data, we don’t just want to just ask it questions based on what it was trained on, we have a lot of data in Microsoft, we have a lot of security products,” Greenwald said.

He cited Microsoft Sentinel as a cybersecurity product that has “connectors to all that data.” He also mentioned ServiceNow connectors, and information from Microsoft Defender, the company’s antivirus software, along with other sources of security data.

A person familiar with the project said the idea is to intercept responses from generative AI models and use the internal security data to point them in the right direction. That essentially creates more deterministic software to try to solve hallucination issues before the product becomes generally available, which is expected this summer.
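The source describes this interception step only at a high level. As one hedged way to picture it, the Python sketch below checks a model's answer against the log it was given and flags any IP address the answer mentions that never appears in that log. The function name, the sample strings, and the choice to check only IP addresses are assumptions for illustration, not details of Security Copilot.

```python
import re

IPV4 = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def ungrounded_addresses(model_answer: str, source_log: str) -> list[str]:
    """List IP addresses cited in the answer that are absent from the source log."""
    known = set(IPV4.findall(source_log))
    cited = set(IPV4.findall(model_answer))
    return sorted(cited - known)


# Invented log and answer; the second address is a made-up "hallucination".
source_log = "Failed logon from 203.0.113.7"
model_answer = "The attacker at 203.0.113.7 pivoted to 198.51.100.99."
flagged = ungrounded_addresses(model_answer, source_log)
```

A deterministic check like this can catch only the narrow class of claims it knows how to verify, so it would complement grounding with real data rather than replace it.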

When Microsoft initially rolled out Security Copilot in late March, the company said the service “doesn’t always get everything right” and noted that “AI-generated content can contain mistakes.”

It described Security Copilot as a “closed-loop learning system” that gets feedback from users and improves over time. “As we continue to learn from these interactions, we are adjusting its responses to create more coherent, relevant and useful answers,” Microsoft said.

Do you work at Microsoft or have insight to share?

Contact Ashley Stewart via email ([email protected]), or send a secure message from a non-work device via Signal (+1-425-344-8242).
